Dify.ai Review 2025: Build & Operate AI Apps with Drag‑Drop LLMOps

Summary

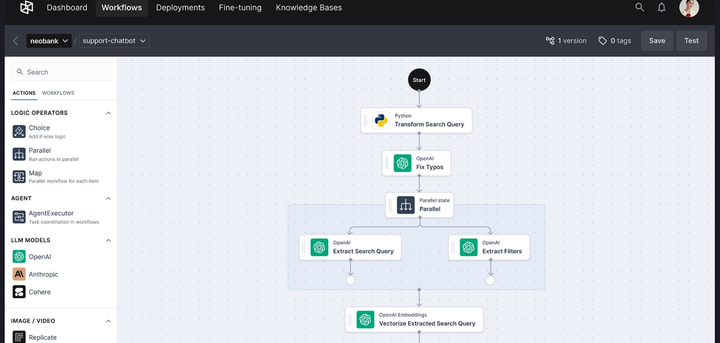

Dify.ai is an open-source LLMOps platform designed to help you build, deploy, and manage AI-powered applications—chatbots, agents, RAG systems—using a visual drag-and-drop interface and natural-language prompts. Whether you're a developer, product manager, or educator, Dify.ai streamlines end-to-end AI app workflows from prototyping to production.

Key Highlights of Dify.ai

- Visual workflow canvas for multi-step LLM pipelines

- Native RAG support: ingest docs, PDFs, vector search

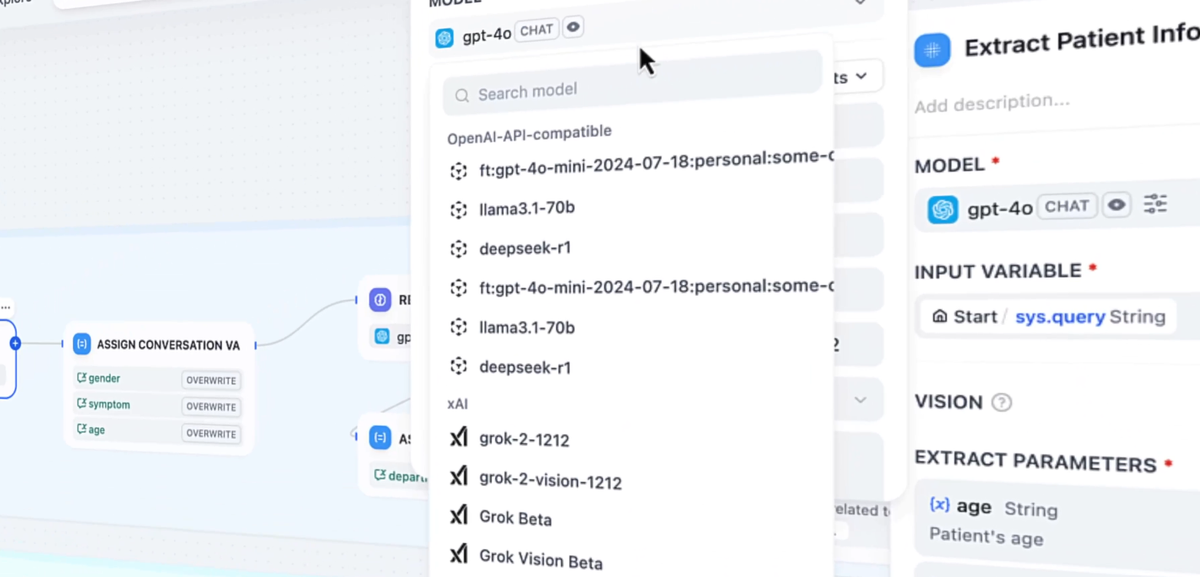

- Multi-model support: OpenAI, Anthropic, Llama 2, Hugging Face, Replicate

- Plugin ecosystem and custom code nodes (Python/Node)

- Self-hosted and cloud options; sandbox tier free

- Annotation-driven feedback and observability tools

Key Features of Dify.ai

- Drag‑and‑drop canvas: visually build workflows with conditionals, tools, and agents

- Prompt IDE: test, debug, and optimize prompt logic inline

- RAG pipeline: document ingestion, vector storage, intelligent retrieval

- Multi-LLM support: swap between providers or self-host models seamlessly

- Custom code & APIs: add HTTP/Code nodes for advanced logic

- Observability: built-in logs, annotations, cost tracking

- BaaS capabilities: auto-generated REST endpoints, auth, deploy-ready APIs

- Open-source & self-hostable: run locally via Docker or deploy on AWS
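To make the three RAG stages in the list above (ingestion, vector storage, retrieval) concrete, here is a toy sketch in plain Python. It is not how Dify implements RAG: the word-count "embedding", the in-memory list used as a store, and every function name are assumptions chosen only to show the shape of the pipeline.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def ingest(documents):
    """Ingestion and storage: keep each chunk next to its vector."""
    return [(doc, embed(doc)) for doc in documents]


def retrieve(store, query, top_k=1):
    """Retrieval: return the top_k chunks most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(store, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:top_k]]


def build_prompt(store, query):
    """Put the retrieved context in front of the question before calling an LLM."""
    context = "\n".join(retrieve(store, query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    store = ingest([
        "Reset your password from the account settings page.",
        "Invoices are emailed on the first day of each month.",
    ])
    print(build_prompt(store, "How do I reset my password?"))
```

A production pipeline would swap `embed` for a real embedding model and the list for a vector database; the point here is only the order of the steps.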

Limitations of Dify.ai

- Steep learning curve if you're non-technical

- Self-hosted build lacks cloud-only features

- Workflow version control and collaboration still evolving

- Plugin marketplace is in beta

- Complex debugging can be manual

Use Cases for Dify.ai

- Developers & AI Engineers: Build chatbots, data pipelines, or AI agents without hand-coding orchestration.

- Product Managers: Prototype intelligent features, gather user feedback, iterate quickly.

- Data Analysts: Create natural-language SQL tools, automate report summaries.

- Customer Support Teams: Deploy RAG chatbots on manuals and ticket data.

- Content Marketers: Generate ad copy, content summaries, script assistants.

- Enterprise IT: Host workflows securely, maintain compliance in self-hosted mode.

- Educators & Researchers: Demonstrate prompt engineering, build Q&A agents on documents.

- Consultants & Agencies: Deliver client-facing AI tools with no-code flexibility.

How I’m Using It

I needed a smarter assistant to summarize weekly code reviews and highlight issues. Here’s my process:

- I upload commit diffs into a RAG node.

- I add two parallel LLM nodes—one for summary, another for potential issues.

- I merge the results in a Template node, then pass the output through a Code node that formats it as a report.

- I deploy as an API and call it each week from my Slack bot.

I went from idea to working assistant in under 48 hours. Now, the team gets a digest with actionable insights—and I can iterate by tweaking workflows or adding tools without rewriting code.
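The last step, calling the deployed workflow from a bot, can be sketched roughly as follows. Treat it as a minimal sketch under stated assumptions rather than my exact setup: the base URL, the `/workflows/run` path, and the payload fields reflect my understanding of Dify's workflow API and should be checked against the API page of your own app, and the input variable name `commit_diffs` and the user ID are hypothetical.

```python
import json
import urllib.request

# Assumption: Dify Cloud base URL; a self-hosted instance would use its own host.
DIFY_BASE_URL = "https://api.dify.ai/v1"


def build_workflow_request(api_key, diff_text, user_id="slack-digest-bot"):
    """Build (but do not send) a POST request that runs a published workflow."""
    payload = {
        # "commit_diffs" is a hypothetical input variable defined in the workflow.
        "inputs": {"commit_diffs": diff_text},
        "response_mode": "blocking",  # wait for the full result instead of streaming
        "user": user_id,
    }
    return urllib.request.Request(
        url=f"{DIFY_BASE_URL}/workflows/run",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def run_weekly_digest(api_key, diff_text):
    """Send the request and return the decoded JSON response."""
    request = build_workflow_request(api_key, diff_text)
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes the payload easy to inspect or unit-test without hitting the network; the Slack bot only needs to call `run_weekly_digest` on a schedule and post the result.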

Pros / Cons

- Pros: visual orchestration, RAG built-in, multi-model support, open-source, deployment-ready, observability

- Cons: can be complex to start, collaboration features nascent, debugging may require manual steps

Frequently Asked Questions

- Can I self-host Dify for free?

  Yes, the Community Edition is open-source and can be self-hosted without limits.

- What’s included in the free sandbox?

  200 message credits, 50 MB document storage, 5 apps, and 30-day logs.

- Which LLMs are supported?

  OpenAI, Anthropic, Azure, Hugging Face, Replicate, Llama 2, and local models.

- Does it support RAG pipelines?

  Yes, it includes ingestion, vector storage, retrieval, and integrated response nodes.

- Can I add custom tools?

  Yes, via HTTP or custom code nodes; a plugin marketplace is also available in beta.

Pricing

Dify Cloud’s free Sandbox tier is a good place to start: 200 message credits, 50 MB of document storage, and a 5-app limit. From there, Professional is $59/month and Team is $159/month, with expanded credits, storage, team sizes, and priority support. The self-hosted edition is free.


AI Score

| Criteria | Score/10 |
|---|---|
| Ease of Use | 8 |
| Feature Completeness | 9 |
| Model Flexibility | 9 |
| RAG Integration | 9 |
| Customization | 8 |
| Deployment Options | 8 |
| Collaboration Tools | 7 |
| Value for Money | 9 |
| Overall | 8.4 |
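The Overall figure is consistent with a plain unweighted average of the eight criteria. No weighting is stated, so equal weights are an assumption here; the short check below just reproduces the arithmetic.

```python
# Scores copied from the table above; equal weighting is an assumption.
scores = {
    "Ease of Use": 8,
    "Feature Completeness": 9,
    "Model Flexibility": 9,
    "RAG Integration": 9,
    "Customization": 8,
    "Deployment Options": 8,
    "Collaboration Tools": 7,
    "Value for Money": 9,
}

total = sum(scores.values())      # 67
overall = total / len(scores)     # 67 / 8 = 8.375
print(round(overall, 1))          # prints 8.4
```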

Alternatives

- LangChain – Code-first framework for LLM pipelines.

- Langflow – Visual flow builder for prompt orchestration.

- Zapier AI – No-code AI workflows with app integrations.

- Replit AI – In-browser IDE with AI-assisted coding.

- Hugging Face Spaces – Host AI demos with Gradio/Streamlit.

- Vertex AI – Managed LLMOps on Google Cloud.

- OpenAI Functions – GPT agents with function calling.

- Weaviate – Vector DB with RAG and semantic search.

- Appsmith – Build internal tools with APIs and logic.

- Rasa X – Open-source chatbot builder for custom flows.

Last updated: July 4, 2025