Dynamiq Review 2025: Low-Code LLMOps Platform for AI Agents

Summary

Dynamiq is an enterprise-grade LLMOps platform for building, deploying, monitoring, and fine-tuning AI agents and workflows through a low-code, visual interface. With RAG support, guardrails, multi-model flexibility, and both cloud and on-premise deployment, Dynamiq simplifies the GenAI application lifecycle from prototype to production.

Key Highlights of Dynamiq

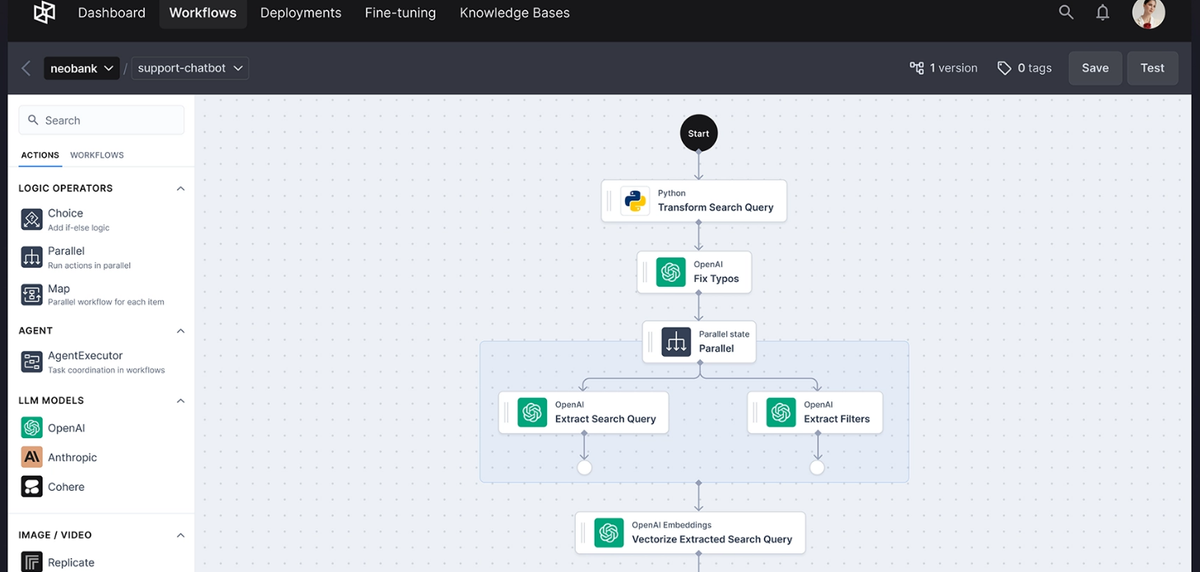

- Visual workflow builder for multi-step LLMOps pipelines

- Built-in RAG for document ingestion and vector search

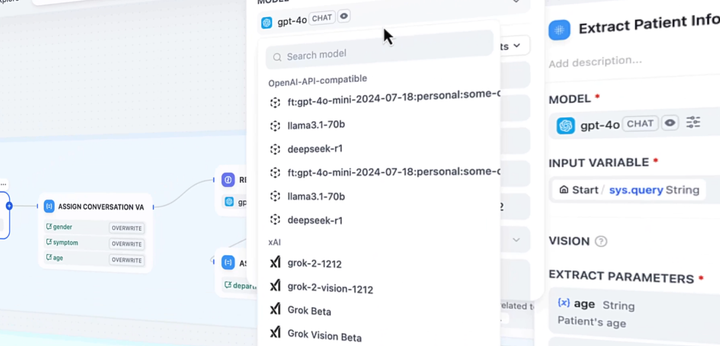

- Support for OpenAI, Anthropic, Llama2, Hugging Face, Gemini, and more

- Enterprise-grade guardrails, observability, compliance (SOC2, GDPR, HIPAA)

- Low-code nodes with Python support and multi-agent orchestration

- Flexible deployment: cloud, on-premise, hybrid, and via the IBM Cloud catalog

Key Features of Dynamiq

- Drag-and-drop workflow canvas to visually compose LLM nodes, RAG, conditional logic and tools

- Prompt IDE for testing and optimizing prompts inline

- RAG ingestion for PDFs, docs, with vector database integration

- Multi-model support: integrate OpenAI, Anthropic, Gemini, Llama2, and local models

- Low-code & code nodes: add Python or API calls for custom logic

- Observability & logging: track usage metrics, costs, outputs

- Guardrails: ensure reliability, compliance, bias mitigation

- Fine-tuning: train open-source models on your own data

- Deployment flexibility: cloud, hybrid, on-premise, VPC & IBM Cloud options

Limitations of Dynamiq

- Steep learning curve for non-technical users

- Some cloud features are limited or unavailable in self-hosted editions

- Collaboration and version control still maturing

- Plugin marketplace currently in beta

- Complex workflows require manual debugging

Use Cases for Dynamiq

- Developers & AI Engineers: Rapidly prototype LLM-powered agents and RAG workflows.

- Product Managers: Design and test AI assistants without deep engineering skills.

- Data Analysts: Build natural-language data summarizers and SQL generators.

- Customer Support: Deploy RAG bots over documentation and ticket histories.

- Enterprise IT & Ops: Host workflows in secure, compliant environments.

- Finance & Healthcare Teams: Build compliant AI agents for sensitive data tasks.

- Consultants & Agencies: Deliver turnkey AI apps for clients with simple visual flows.

- Educators & Researchers: Teach LLMOps, prompt engineering, and AI lifecycle management.

How I’m Using It

When I first logged into Dynamiq, I wanted an AI assistant to summarize my weekly sprint reviews. Here’s the journey:

- I uploaded commit logs and meeting notes into a RAG node.

- I connected an LLM node to generate summaries and another to detect potential blockers.

- I added a Python code node to format results into Slack-ready messages.

- I deployed the flow and scheduled it to run weekly, posting updates to my team.

Within two days I went from idea to a reliable AI agent, with no infrastructure to set up, full visibility in the logs, and the ability to refine prompts over time. It saved hours each week and kept my team aligned.
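The formatting step in that flow can be sketched roughly as follows. This is a minimal illustration, not Dynamiq's actual code-node interface: the `SprintDigest` container and the `format_slack_message` function are hypothetical names, and the sketch assumes the two LLM nodes hand over a summary string and a list of blocker strings. The output uses Slack's mrkdwn conventions (`*bold*`, `_italic_`).

```python
from dataclasses import dataclass, field


@dataclass
class SprintDigest:
    """Hypothetical container for the outputs of the two LLM nodes."""
    week: str
    summary: str
    blockers: list[str] = field(default_factory=list)


def format_slack_message(digest: SprintDigest) -> str:
    """Render the digest as Slack mrkdwn text (*bold*, _italic_, bullet lines)."""
    lines = [f"*Sprint review: {digest.week}*", "", digest.summary.strip()]
    if digest.blockers:
        lines += ["", "*Potential blockers*"]
        lines += [f"• {item.strip()}" for item in digest.blockers]
    else:
        lines += ["", "_No blockers detected._"]
    return "\n".join(lines)


if __name__ == "__main__":
    # Illustrative input only; real values would come from the upstream nodes.
    digest = SprintDigest(
        week="2025-W27",
        summary="Search indexing shipped; onboarding flow is in review.",
        blockers=["Waiting on API quota increase"],
    )
    print(format_slack_message(digest))
```

Keeping the formatter as a pure function with no network calls makes it easy to test outside the platform before pasting it into a code node.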

Pros / Cons

- Pros: Intuitive low-code canvas, built-in RAG, multi-model flexibility, enterprise compliance, fine-tuning, wide deployment options.

- Cons: Learning curve, some cloud features limited when self-hosting, evolving collaboration tools, manual debugging on complex flows.

Frequently Asked Questions

- Can I self-host Dynamiq?

Yes, the Community Edition is open-source and supports self-hosting via Docker or on-premise deployment.

- What does the free plan include?

Cloud free tier includes 1 user, 1 workflow, 1 RAG KB, and 1,000 executions/month.

- Which LLMs are supported?

Dynamiq integrates with OpenAI, Anthropic, Google's Gemini, Hugging Face models, Replicate, Llama2 and local setups.

- Can I deploy on-premise or hybrid?

Yes, it supports on-premise, hybrid, cloud-native, VPC, AWS, IBM Cloud, and IBM watsonx.

- Does Dynamiq support fine-tuning?

Yes, with two-click fine-tuning for open-source LLMs using your data.

Pricing

Dynamiq Cloud offers a free tier covering 1 user, 1 workflow, and 1 RAG KB (up to 1,000 executions/month). Paid plans start at $29/month (Solo) and rise to $975/month (Growth), with custom enterprise packages above that. Self-hosting the open-source edition is free.


AI Score

| Criteria | Score /10 |
|---|---|
| Ease of Use | 8 |
| Workflow Completeness | 9 |
| Model Flexibility | 9 |
| RAG Integration | 9 |
| Compliance & Security | 9 |
| Deployment Options | 8 |
| Collaboration Features | 7 |
| Value for Money | 9 |
| Overall | 8.5 |
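The review does not state how the overall score is derived. As a quick check, and assuming equal weighting (an assumption, not something the scoring method confirms), the eight criteria sum to 68, and 68 / 8 = 8.5, which matches the listed figure:

```python
from statistics import mean

# Criterion scores copied from the table above.
scores = {
    "Ease of Use": 8,
    "Workflow Completeness": 9,
    "Model Flexibility": 9,
    "RAG Integration": 9,
    "Compliance & Security": 9,
    "Deployment Options": 8,
    "Collaboration Features": 7,
    "Value for Money": 9,
}

# Assumption: every criterion carries the same weight.
overall = mean(scores.values())
print(f"Unweighted mean: {overall}")
```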

Alternatives

- LangChain – Code-first framework for building LLM pipelines.

- LangFlow – Visual prompt orchestration UI.

- Zapier AI – No-code AI workflows with app integrations.

- Replit AI – Browser IDE with AI assistant support.

- Hugging Face Spaces – Host AI demos using Gradio or Streamlit.

- Vertex AI – Google-managed platform for building AI pipelines.

- OpenAI Functions – GPT function-calling orchestration tool.

- Weaviate – Vector database with built-in RAG.

- AppSmith – Build internal tools via APIs and logic.

- Rasa X – Open-source platform for customizable chatbots.

Last updated: July 3, 2025